While promoting Agentic AI, we keep hearing the same questions: How should we integrate with MCP (Model Context Protocol)? Which MCP servers do you recommend? In this article, I want to address questions that tend to be overlooked but are actually more important. Why do we need MCP in the first place? What features does it offer, and what pain points does it solve? Why is it so popular? When does it make sense to use MCP, and when should we avoid it?

The Pain of Tool Integration

If you’ve ever built an Agent directly on LLM APIs, you’ve likely run into a frustrating issue: each LLM provider uses a different API format for tool invocation (often called function calling). OpenAI’s API, for instance, describes each tool’s name, purpose, and parameters as a JSON object. Anthropic’s API looks similar on the surface, but its field names and message structure differ in the details, so code copied directly from a GPT integration may not run properly against Claude. Gemini uses yet another format.
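To see how the formats diverge, here is one hypothetical tool, `get_weather`, translated into the tool-definition shape each provider expects. The shapes are simplified from the OpenAI Chat Completions, Anthropic Messages, and Gemini function-calling APIs as we understand them; treat this as a sketch and check each provider’s current reference before relying on the exact field names.

```python
import json

# A provider-neutral description of one (hypothetical) tool.
# "parameters" is a plain JSON Schema object shared by all three formats.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_openai(tool: dict) -> dict:
    # OpenAI Chat Completions: wrapped in {"type": "function", "function": {...}}.
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["parameters"],
        },
    }


def to_anthropic(tool: dict) -> dict:
    # Anthropic Messages API: a flat object; the schema goes under "input_schema".
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["parameters"],
    }


def to_gemini(tool: dict) -> dict:
    # Gemini: declarations are grouped in a "function_declarations" list.
    return {
        "function_declarations": [
            {
                "name": tool["name"],
                "description": tool["description"],
                "parameters": tool["parameters"],
            }
        ]
    }


if __name__ == "__main__":
    for convert in (to_openai, to_anthropic, to_gemini):
        print(convert.__name__, json.dumps(convert(WEATHER_TOOL)))
```

The JSON Schema itself is shared; what differs is the wrapping and the key names, which is exactly the kind of boilerplate a per-provider adapter has to absorb.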

Thus, to keep an Agentic AI system compatible and flexible across multiple LLMs, you often need to build and maintain a separate adapter layer for each provider. In commercial product development, this is a heavy investment that can significantly slow down deployment and iteration. As a result, both open-source projects and commercial companies have been working on a single interface that abstracts the tool-invocation layer sitting between the Agent and the LLM APIs. With such an interface, developers can “write once, run anywhere,” deploying a single Agent across all popular LLM APIs without changing their code. It’s like bringing a USB-C port to the Agent world: a shared standard that could drive the field forward and boost everyone’s development efficiency.
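The other half of such an adapter layer is reading tool calls back out of each provider’s response. The sketch below normalizes simplified response fragments (the `tool_calls` list on an OpenAI message, `tool_use` content blocks from Anthropic, `functionCall` parts from Gemini) into one hypothetical `ToolCall` type, so the rest of the Agent only ever sees a single shape. The payloads are trimmed-down approximations, not complete API responses.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class ToolCall:
    """The single internal shape the rest of the (hypothetical) Agent consumes."""
    name: str
    arguments: dict[str, Any]


def from_openai(message: dict) -> list[ToolCall]:
    # OpenAI chat message: each "tool_calls" entry carries arguments as a JSON string.
    return [
        ToolCall(c["function"]["name"], json.loads(c["function"]["arguments"]))
        for c in message.get("tool_calls") or []
    ]


def from_anthropic(content: list[dict]) -> list[ToolCall]:
    # Anthropic message content: "tool_use" blocks carry "input" as an object.
    return [
        ToolCall(b["name"], b["input"])
        for b in content
        if b.get("type") == "tool_use"
    ]


def from_gemini(parts: list[dict]) -> list[ToolCall]:
    # Gemini candidate parts: a "functionCall" carries "args" as an object.
    return [
        ToolCall(p["functionCall"]["name"], p["functionCall"].get("args", {}))
        for p in parts
        if "functionCall" in p
    ]


if __name__ == "__main__":
    openai_msg = {"tool_calls": [{"id": "call_1", "type": "function",
                                  "function": {"name": "get_weather",
                                               "arguments": '{"city": "Paris"}'}}]}
    anthropic_content = [{"type": "text", "text": "Checking the weather."},
                         {"type": "tool_use", "id": "toolu_1",
                          "name": "get_weather", "input": {"city": "Paris"}}]
    gemini_parts = [{"functionCall": {"name": "get_weather", "args": {"city": "Paris"}}}]
    calls = (from_openai(openai_msg) + from_anthropic(anthropic_content)
             + from_gemini(gemini_parts))
    print(calls)  # three equal ToolCall objects
```

Note the small but real differences this hides, such as OpenAI encoding arguments as a string that must be parsed while the other two return structured objects.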

But companies are not merely interested in technical progress here; they also see significant business opportunities. Once Microsoft became the de facto standard for PC operating systems, for example, it wielded tremendous clout and captured large profits from everything built on top of it. Similarly, after Apple and Google built their app store ecosystems, they made huge gains through revenue sharing. So if a single company can dominate how this kind of standard is set, it can embed its own agenda in the process. On one hand, it can tightly integrate the standard with its own products, nudging developers into its ecosystem. On the other hand, it can turn standard certification into an ecosystem barrier and reap the resulting benefits. Startups, conversely, can gain a foothold quickly by producing an open-source, lightweight, and user-friendly protocol that attracts users who don’t want to be locked into big corporations.

This two-sided competition is why the LLM tool protocol space has become a battleground of rival “fiefdoms,” with each player trying to stake an early claim. One of the earliest approaches was ChatGPT Plugins, released by OpenAI in 2023 and since discontinued, which used a manifest file and an OpenAPI specification to introduce tools and prompts to the LLM. Later, LangChain introduced its own tool protocol, using its early ecosystem advantage and wealth of components to attract a sizable user base. Meanwhile, Pydantic introduced a Python decorator-based approach that emphasizes strong typing and a Pythonic style. Among these protocols, the best known is probably the Model Context Protocol (MCP). But amid such fierce competition, why has MCP quickly gained traction with so many companies and developers? To understand this, we need to step back and examine the basic technical requirements a standard like this must fulfill.
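To make the decorator-based style concrete: the idea is that a typed function signature becomes the tool description, so there is no separate schema to keep in sync. The sketch below is a stdlib-only illustration of that idea, not the API of Pydantic or any other library; the `tool` decorator, the `TOOLS` registry, and the type mapping are all hypothetical.

```python
import inspect
from typing import Callable, get_type_hints

# Hypothetical mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

# Hypothetical registry: tool name -> schema plus the Python handler.
TOOLS: dict[str, dict] = {}


def tool(func: Callable) -> Callable:
    """Register func as a tool, deriving a JSON Schema from its type hints.

    Every parameter must be annotated with one of the types in _JSON_TYPES.
    """
    hints = get_type_hints(func)
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": _JSON_TYPES[hints[name]]}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value -> the model must supply it
    TOOLS[func.__name__] = {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties,
                       "required": required},
        "handler": func,  # kept locally; strip it before sending specs to an LLM
    }
    return func


@tool
def add(a: int, b: int = 0) -> int:
    """Add two integers."""
    return a + b


if __name__ == "__main__":
    print({k: v for k, v in TOOLS["add"].items() if k != "handler"})
```

The convenience is real inside one Python codebase, but the schema lives in Python type hints, which is why this style travels less easily across languages and teams.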

Technical Advantages

From a purely technical standpoint, the essential requirement for a tool protocol is an abstraction layer that lets Agentic AI developers “write once, run anywhere.” However, it can’t be overly abstract. LangChain is notorious in this regard: people often complain that its web of hundreds of abstractions makes it hard to figure out where a change needs to be made. The protocol also needs to support enough features; if developers discover it lacks something they need, they’ll inevitably switch to one that has it. Lastly, it must be easy to debug, because debugging often consumes far more development time than writing the code itself. Viewed through these lenses, the market currently has few strong contenders. For example:

- ChatGPT Plugins were easy to start with: if you could write JSON, you were set. But they were tightly bound to ChatGPT, which limited extensibility.

- LangChain has a rich ecosystem, but its high level of abstraction makes Agent logic overly complicated.

- Pydantic is great within a single Python project, where it makes development a breeze, but everything lives in one Python library, so cross-language or cross-team use isn’t as straightforward.

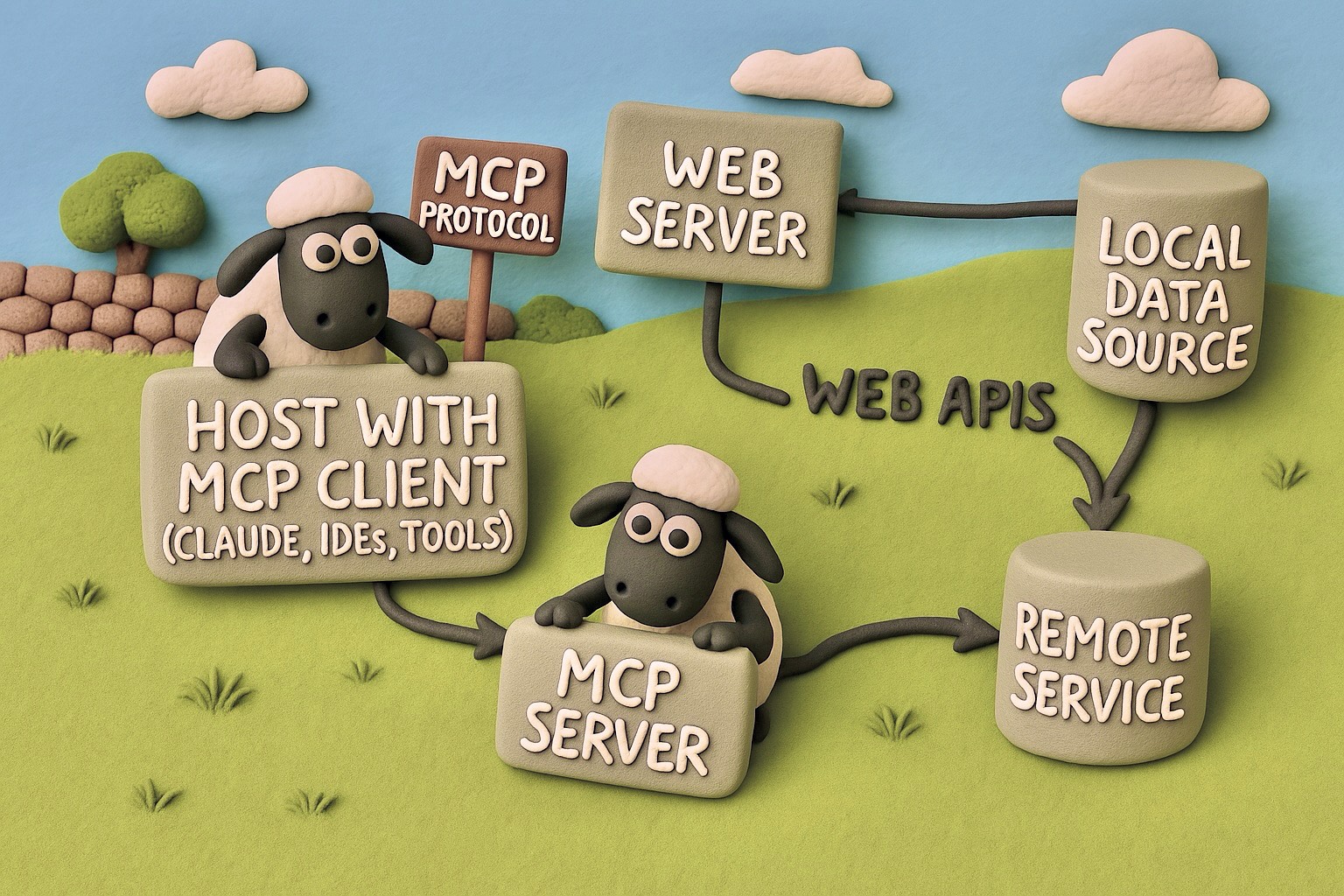

By contrast, MCP sits in the middle on all of these dimensions without any glaring weakness. On abstraction, MCP feels like a lightweight, low-level protocol: it describes which resources exist for the model, which tools are available, what the prompts look like, and how to invoke them. This strikes a reasonable balance between too simplistic and too overloaded. It connects smoothly to different kinds of services and resources, yet it doesn’t overreach by bundling higher-level features such as multi-step reasoning or planning, which keeps the overall design simple. On expressiveness, MCP supports resources, tools, prompt templates, and sampling (which lets a server request an LLM completion through the client), giving it decent extensibility. On ease of use and debugging, MCP offers SDKs in multiple languages and is designed to be AI-friendly, providing resources such as llms-full.txt, a full-text version of its documentation meant to be handed to an LLM, so newcomers can quickly implement an MCP-compliant server or client. However, because MCP uses a client-server architecture in which servers usually run as separate processes, beginners still have to read protocol logs and interpret message formats when debugging. For small-scale needs, writing everything in a single Python script might still be simpler. The official Inspector tool helps, but the learning curve remains fairly steep.
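Under the hood, MCP messages are JSON-RPC 2.0. The toy dispatcher below handles only the `tools/list` and `tools/call` methods to show the shape of what a client and server exchange, and what you end up reading in protocol logs. It is a sketch under heavy simplifications: it skips the `initialize` handshake, capability negotiation, notifications, resources, prompts, sampling, and any real transport, and the `get_weather` tool is hypothetical. For production, use an official MCP SDK and check the specification for exact message shapes.

```python
import json
from typing import Any


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"


# Tool registry: MCP describes each tool by name, description, and inputSchema.
TOOLS: dict[str, dict[str, Any]] = {
    "get_weather": {
        "description": "Get the current weather for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": get_weather,
    }
}


def _reply(req_id: Any, result: dict) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request (as a JSON string) and return the response."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": name, "description": t["description"],
                  "inputSchema": t["inputSchema"]} for name, t in TOOLS.items()]
        return _reply(req_id, {"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        text = tool["handler"](**params.get("arguments", {}))
        # Tool results come back as a list of typed content items.
        return _reply(req_id, {"content": [{"type": "text", "text": text}],
                               "isError": False})
    return _error(req_id, -32601, "Method not found")  # standard JSON-RPC code


if __name__ == "__main__":
    print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
    print(handle(json.dumps({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                             "params": {"name": "get_weather",
                                        "arguments": {"city": "Paris"}}})))
```

With the stdio transport, the client launches the server as a subprocess and exchanges messages like these as newline-delimited JSON over stdin and stdout, which is why debugging so often comes down to reading raw messages.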

Business Factors

So, from a technical perspective, MCP isn’t the first product to tackle the problem of standardizing LLM tool invocation, nor does it have a crushing technical lead. Beyond simply having no obvious technical drawbacks, it owes its high visibility mainly to three business factors:

- Anthropic’s Preparedness. Right at launch, Anthropic provided a relatively complete ecosystem: official documentation, sample code, multi-language SDKs, and native MCP support in Claude Desktop. If you chose MCP, you got a reasonably streamlined, unified abstraction plus an out-of-the-box environment from Anthropic, with no need to start from scratch. This attracted a group of early adopters.

- Promotion and Ecosystem Building. Anthropic invested heavily in marketing and outreach. It emphasized MCP’s openness, highlighting that it doesn’t depend on any specific framework or programming language. It also enlisted multiple partner companies to integrate MCP, quickly showcasing real-world use cases and creating a powerful demonstration effect. This relates to the “compounding effect” we discussed in a previous article. From a developer’s perspective, writing one MCP implementation means instant access to a variety of platforms and tools, vastly increasing visibility and network effects, which is a very tangible draw.

- Anthropic’s Reputation and Commitment. Anthropic has a solid track record in the Agentic AI domain, especially in multi-agent scenarios, so developers trust that the company has both forward-looking technology and deep resources. At the same time, Anthropic stresses that MCP is a fully open protocol, which boosts user confidence and encourages further buy-in.

These technical and non-technical factors combine to create the current popularity of MCP.

Where MCP Fits

Returning to our initial question of how to integrate with MCP, it’s crucial to note that much of the interest in MCP isn’t really about the protocol itself. People partly conflate it with “Agentic AI development,” assuming that to build Agentic AI they first need a comprehensive framework. As we have explained in other articles, that assumption doesn’t hold right now: the barrier to using Agentic AI is actually quite low, and even without any framework or protocol you can build and iterate on Agents efficiently. In Cursor, for example, you only need to write a Python command-line tool and give natural-language instructions on how to use it. In one example, we built an Agent in about five minutes that added web-search functionality to Cursor.
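As a rough illustration of how little is needed, here is the kind of command-line tool such a setup relies on. The script name `search.py` and the `search_web` backend are hypothetical, and the stub returns placeholder results so the sketch runs without network access; in practice you would swap in a real search API client.

```python
import argparse
import json
import sys
from typing import Optional


def search_web(query: str, limit: int) -> list[dict]:
    """Hypothetical search backend: swap in a real search API client here."""
    # Placeholder results so the sketch runs offline.
    return [{"title": f"Result {i} for {query}", "url": f"https://example.com/{i}"}
            for i in range(1, limit + 1)]


def main(argv: Optional[list[str]] = None) -> int:
    parser = argparse.ArgumentParser(
        description="Search the web and print the results as JSON.")
    parser.add_argument("query", help="search terms")
    parser.add_argument("--limit", type=int, default=3,
                        help="maximum number of results (default: 3)")
    args = parser.parse_args(argv)
    # JSON on stdout is easy for the Agent in the IDE to read back.
    json.dump(search_web(args.query, args.limit), sys.stdout, indent=2)
    print()
    return 0


# Only run the CLI when arguments are given, e.g. `python search.py "mcp" --limit 5`.
if __name__ == "__main__" and sys.argv[1:]:
    sys.exit(main())
```

A one-line instruction, for example in a Cursor rule, along the lines of “To search the web, run `python search.py "<query>"` and read the JSON it prints” is then enough for the Agent to start using the tool.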

From the analysis above, MCP arose mainly to ease the hassle of adapting to multiple LLMs, an important but narrow aim. It doesn’t tackle the more pivotal commercial questions, such as finding product-market fit or solving core customer problems with AI. Put differently, MCP has limited bearing on the high-level, existential concerns that decide the fate of a startup. Using MCP won’t magically reveal hidden user pain points or unexplored scenarios, nor will it fix intractable technical hurdles overnight. In fact, debugging an MCP setup can be harder than debugging ordinary code, which may slow development down. So whether to adopt MCP is probably not a core decision in Agent development. It matters, but mainly for long-term efficiency, not as a prerequisite for building an Agent.

Furthermore, because Agentic AI is evolving rapidly, the quality of MCP support varies across the ecosystem. Cursor’s MCP integration, for instance, has had its share of hiccups, as its release notes show: MCP-related bug fixes keep popping up. Our general stance is this: if you’re just learning Agentic AI or exploring potential user scenarios, there’s no rush to lock yourself into a specific technology. Instead, take advantage of the low entry barrier and iterate quickly on one or two LLMs. Once you confirm that customers actually see value in the product, you can have an AI assistant port the code to MCP for easy adaptation across multiple LLMs. MCP also offers real benefits for software distribution: many platforms, including Claude Desktop and Cursor, need only minimal changes to get an MCP server up and running. MCP can therefore be a mid- or late-stage accelerator for product iteration and distribution, just not something you necessarily need at the very beginning.

Conclusion

The current competition around tool invocation protocols is still in a phase of flourishing diversity; it’s too early to call it settled. MCP may lead the pack right now, but that doesn’t make it the definitive standard. History shows that a company that wins at the standards level can wield immense influence over how the ecosystem evolves afterward. Naturally, large enterprises will invest heavily in this domain, gradually steering the industry’s resources toward their own products and platforms. We may end up with a few major “camps” duking it out, much like the earlier browser wars or operating system rivalries.

For us developers, it’s crucial to focus on our own application requirements while keeping an eye on MCP and other protocols. Agentic AI evolves swiftly, and a new solution could well emerge in the near future that makes today’s protocols obsolete. Nevertheless, understanding how MCP works, and whether it truly advances your product’s goals, will help you make a more informed decision about when and why to integrate it.
